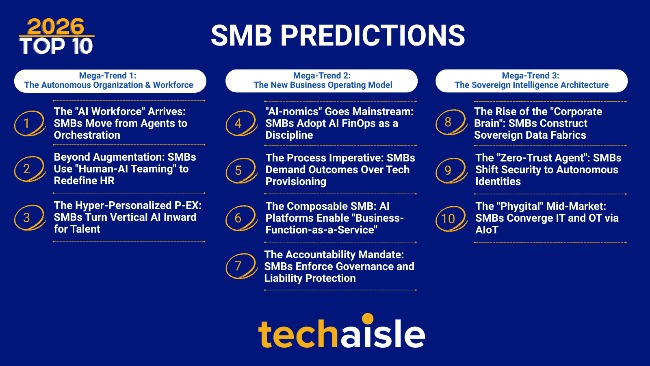

Techaisle’s 2025 SMB predictions captured the critical first wave of adoption of AI-driven capabilities within the SMB and mid-market segments. Themes like Agentic AI, Embedded AI-aaS, and AI-First Workplaces set the stage for transformation.

For 2026 and beyond, our analysis builds on this foundation to focus on the inevitable consequences of that adoption. The central strategic challenge for SMBs will no longer be whether to adopt AI, but how to manage the resulting complexity. This new landscape will be defined by the economic realities of AI ("AI-nomics"), the orchestration of autonomous AI workforces, and the critical need for sovereign data intelligence. The following 10 predictions detail this next wave of opportunity, organized into three strategic mega-trends. To illustrate this evolution, each 2026 prediction is paired with the foundational 2025 trend it builds on.