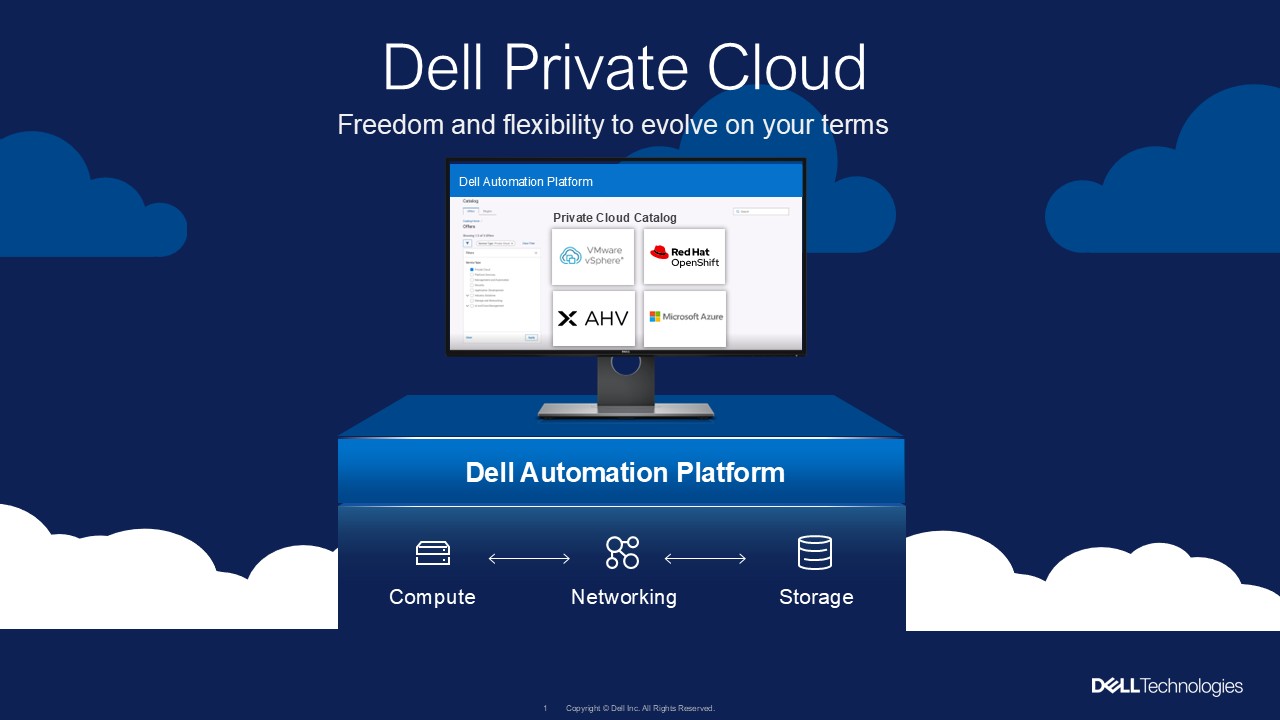

Dell is not just selling a new stack. It is selling the right to change your mind.

The Strategic Shift to Disaggregated Efficiency

For over a decade, the hyperconverged infrastructure (HCI) narrative was defined by the indivisible stack: the tight binding of compute, storage, and hypervisor into a single, locked appliance. Broadcom’s VMware restructuring and the relentless pull of AI-ready infrastructure have shattered that model. Dell Private Cloud with Nutanix support is not just a new SKU; it is a move toward infrastructure liquidity. By decoupling storage from compute and layering a unified automation engine on top, Dell has turned the hypervisor into a personality rather than a permanent state.

Nutanix is famous for data locality, but Dell Private Cloud intentionally breaks that mold. By using external enterprise storage, namely PowerStore (expected Summer 2026) and PowerFlex, Dell eliminates the software-defined storage (SDS) tax: the compute cycles and memory that each node traditionally spends running the storage layer. In an era where hypervisor licensing is increasingly tied to core counts, spending nearly a third of expensive, licensed CPU capacity on managing the storage layer is no longer an operational quirk. It is a financial liability.
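The arithmetic behind that liability can be sketched in a few lines. This is a rough illustration only: the function name `sds_tax`, the node and core counts, and the per-core license price are all hypothetical placeholders, and the 30% overhead simply stands in for the "nearly a third" figure above. It is not a Dell, Nutanix, or Broadcom pricing model.

```python
def sds_tax(cores_per_node: int, nodes: int,
            overhead_fraction: float, license_cost_per_core: float) -> dict:
    """Estimate what storage overhead costs under per-core licensing.

    All inputs are illustrative assumptions, not vendor figures.
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")

    licensed_cores = cores_per_node * nodes
    # Cores that are licensed but consumed by the storage layer.
    overhead_cores = licensed_cores * overhead_fraction
    usable_cores = licensed_cores - overhead_cores
    total_license_cost = licensed_cores * license_cost_per_core

    return {
        "licensed_cores": licensed_cores,
        "overhead_cores": overhead_cores,
        "usable_cores": usable_cores,
        # License spend attributable to cores that run no workloads.
        "wasted_spend": overhead_cores * license_cost_per_core,
        # What each core that actually runs workloads effectively costs.
        "effective_cost_per_usable_core": total_license_cost / usable_cores,
    }


# Hypothetical cluster: 8 nodes x 32 cores, 30% overhead, 100 units per core.
result = sds_tax(cores_per_node=32, nodes=8,
                 overhead_fraction=0.30, license_cost_per_core=100.0)
print(f"{result['effective_cost_per_usable_core']:.2f}")  # 142.86
```

Under these assumed numbers, a nominal 100-unit core effectively costs about 143 units per core that does useful work. The sketch ignores the cost of the external array itself, which any real comparison would need to include.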

For the enterprise, this is about standardizing SLAs across a diverse estate. Large organizations can now deliver consistent data reduction and six-nines availability across VMware, Nutanix, and OpenShift clusters using a shared storage pool. This removes the performance cliff caused by disparate data layouts across hypervisors, ensuring that a database performs identically whether it sits on AHV or ESXi. Storage ceases to be a hypervisor-dependent component and becomes a global enterprise utility.

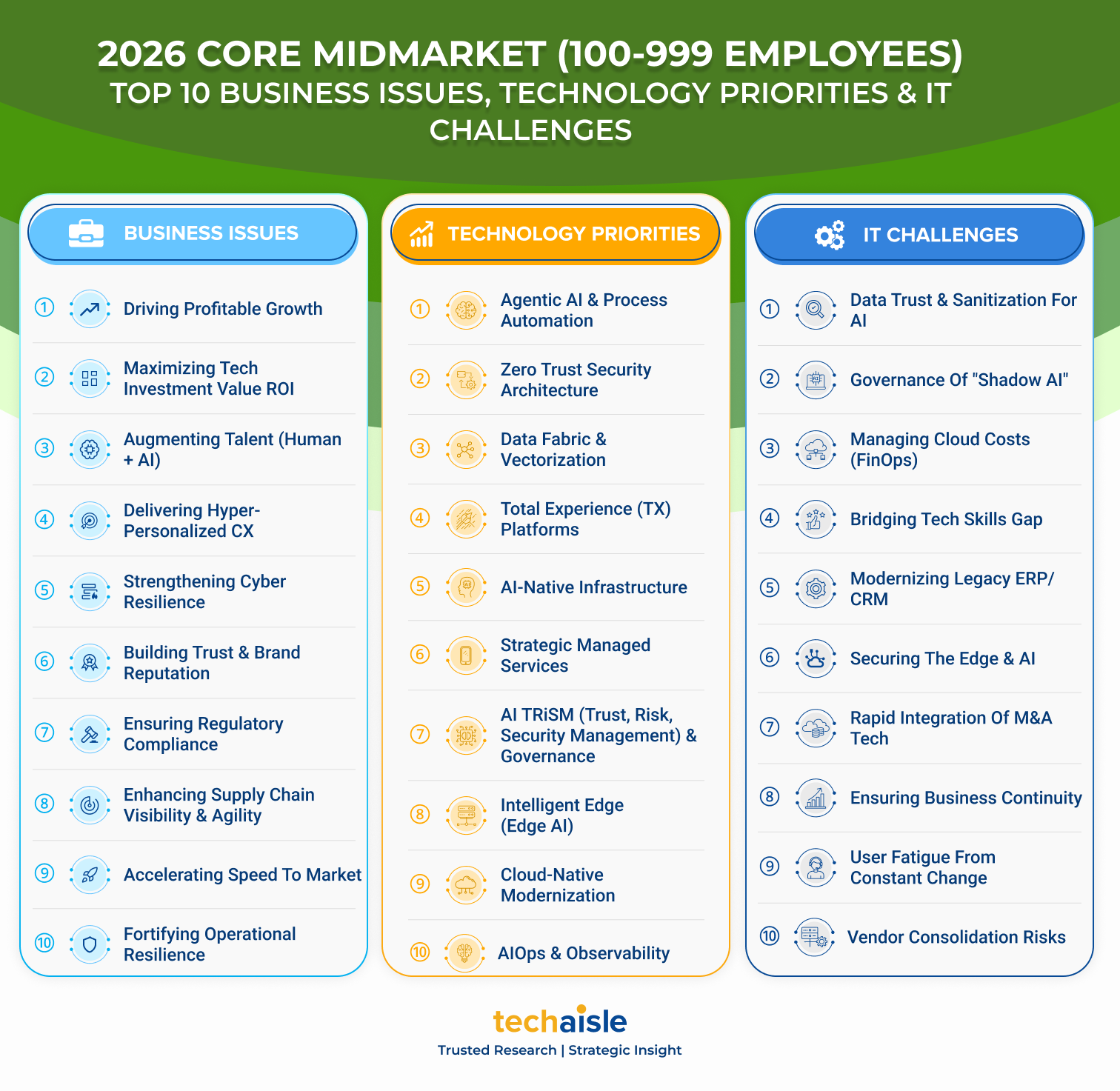

For the midmarket, this shift is a vital cost-control mechanism. As Broadcom’s licensing pivots toward high-value bundles, midmarket firms can no longer absorb the inefficiency of forced resource coupling. They can now scale storage capacity independently of compute, growing their data footprint without being forced into higher hypervisor licensing brackets.
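A minimal sketch makes the coupling problem concrete. It assumes a simplified model in which every HCI node contributes a fixed amount of capacity and every core in every node must be licensed; the function names and all figures are hypothetical, and the model ignores replication overhead, minimum-core licensing rules, and the price of the external array.

```python
import math


def nodes_for_capacity(required_tb: float, tb_per_node: float) -> int:
    """Nodes needed to reach a capacity target when storage lives in the nodes."""
    return math.ceil(required_tb / tb_per_node)


def licensed_cores_coupled(required_tb: float, tb_per_node: float,
                           compute_nodes: int, cores_per_node: int) -> int:
    """Coupled model: the node count is driven by whichever need is larger."""
    nodes = max(compute_nodes, nodes_for_capacity(required_tb, tb_per_node))
    return nodes * cores_per_node


def licensed_cores_disaggregated(compute_nodes: int, cores_per_node: int) -> int:
    """Disaggregated model: capacity grows on the array, so only compute counts."""
    return compute_nodes * cores_per_node


# Hypothetical estate: compute needs 6 nodes of 32 cores; each node holds 40 TB.
for capacity_tb in (400, 600):
    coupled = licensed_cores_coupled(capacity_tb, 40, compute_nodes=6, cores_per_node=32)
    split = licensed_cores_disaggregated(compute_nodes=6, cores_per_node=32)
    print(capacity_tb, coupled, split)
# 400 320 192
# 600 480 192
```

In this toy model, growing from 400 TB to 600 TB adds 160 licensed cores in the coupled case and none in the disaggregated case, which is the bracket-creep effect described above.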